The Home Robotics Challenge: Why Traditional Methods Fall Short

Imagine a robot that can tidy a child’s playroom, working around stuffed animals, LEGO bricks, and sippy cups, or collaborate with another robot to unload groceries. For decades, such scenarios seemed like science fiction. Homes are unstructured, unpredictable environments where robots must adapt to thousands of objects they’ve never seen. Traditional robotics relies on manual programming or thousands of demonstrations per behavior, an approach that does not scale to the variety of a real home. Enter Helix, a Vision-Language-Action (VLA) model that redefines what humanoid robots can achieve.

Introducing Helix: The First Generalist Humanoid Control Model

Helix, unveiled by Figure on February 20, 2025, is a game-changer. It’s the first VLA model to unify perception, language understanding, and real-time control for full humanoid upper-body movement. Here’s what sets Helix apart:

Full Upper-Body Dexterity: Controls wrists, fingers, torso, and head simultaneously at 200Hz.

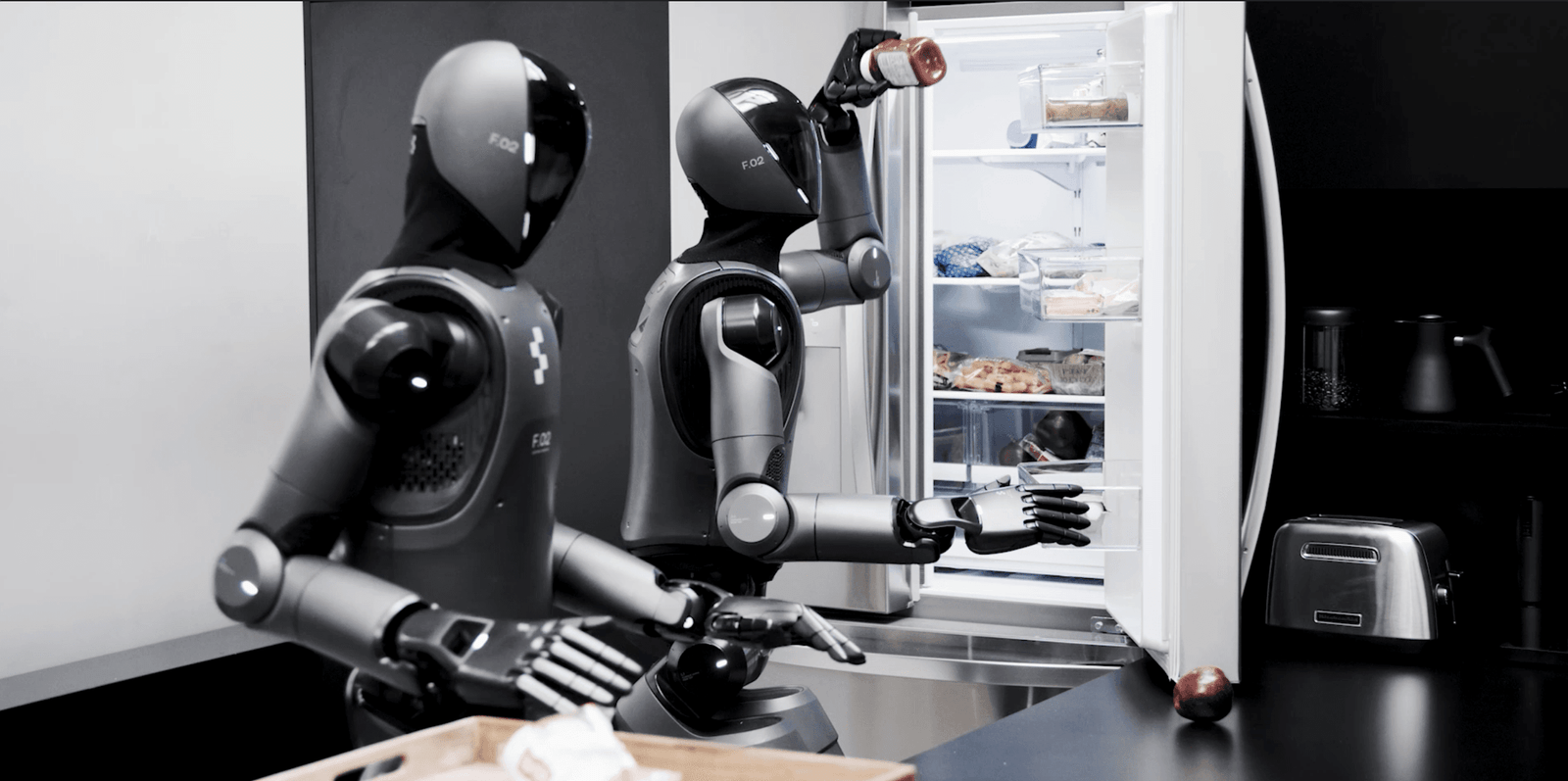

Multi-Robot Collaboration: Two robots can solve long-horizon tasks with unseen objects.

Pick Up Anything: Grasp thousands of novel household items via simple language commands.

Single Neural Network: One model handles all tasks—no task-specific fine-tuning.

Commercial-Ready: Runs on low-power GPUs, ready for real-world deployment.

How Helix Works: Bridging “Slow” Thinking and “Fast” Action

Helix’s architecture mimics human cognition, combining deliberate planning with reflexive action:

System 2 (S2): The “strategist.” A 7B-parameter vision-language model processes scenes at 7-9Hz, interpreting context and commands such as “Hand the cookies to the robot on your right” and condensing them into a latent intent that S1 can act on.

System 1 (S1): The “executor.” An 80M-parameter transformer translates S2’s latent intent into 200Hz actions, adjusting grip, posture, and gaze in real time.

This split allows Helix to generalize like a VLM while reacting like a high-speed control policy. For example, in Video 2, one robot adapts its grip as another passes an item, all while following high-level goals.
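The rate split can be made concrete with a little arithmetic. The Python sketch below is illustrative only: the function names, the idealized jitter-free clocks, and the default 8Hz S2 rate (a midpoint of the 7-9Hz range) are assumptions, not part of Helix. It counts how many 200Hz S1 steps reuse each S2 output.

```python
def s1_steps_per_s2_update(s1_hz: float, s2_hz: float) -> float:
    """Average number of S1 control steps executed per S2 update."""
    return s1_hz / s2_hz


def latent_index_per_tick(s1_hz: int = 200, s2_hz: int = 8, seconds: int = 1) -> list[int]:
    """For each S1 tick, return the index of the newest S2 output in use.

    Index 0 is the initial output. Clocks are assumed to be perfectly regular,
    which a real system would not be.
    """
    total_ticks = s1_hz * seconds
    # Tick k happens at time k / s1_hz, by which point S2 has moved on
    # floor((k / s1_hz) * s2_hz) times. Integer math avoids float rounding.
    return [(k * s2_hz) // s1_hz for k in range(total_ticks)]


if __name__ == "__main__":
    print(round(s1_steps_per_s2_update(200, 7), 1))  # slowest S2 rate
    print(round(s1_steps_per_s2_update(200, 9), 1))  # fastest S2 rate
    ticks = latent_index_per_tick()
    print(len(ticks), len(set(ticks)))  # S1 ticks vs. distinct S2 outputs
```

Under these assumptions, each S2 output is reused for roughly 22 to 29 S1 steps, which is why S1 has to react on its own between S2 updates.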

Technical Breakthroughs: Efficiency and Scalability

Training on a Budget

Helix was trained on roughly 500 hours of teleoperated data, less than 5% of the size of previously collected VLA datasets. A VLM applies hindsight labeling to the recorded episodes, auto-generating the instruction for each one, so instruction-action pairs do not have to be written by hand.
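As a rough illustration of hindsight labeling, the sketch below pairs each recorded clip with an instruction produced after the fact. Everything here is hypothetical: `Clip`, `TrainingPair`, `hindsight_label`, and the `toy_labeler` stand-in for a VLM are invented for this example and do not reflect Figure's actual pipeline.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass
class Clip:
    """One teleoperated segment (hypothetical structure)."""
    frames: Sequence[str]               # stand-in for camera frames
    actions: Sequence[Sequence[float]]  # recorded robot actions


@dataclass
class TrainingPair:
    instruction: str
    actions: Sequence[Sequence[float]]


def hindsight_label(
    clips: Sequence[Clip],
    labeler: Callable[[Sequence[str]], str],
) -> List[TrainingPair]:
    """Ask the labeler which instruction would explain each clip, then pair
    that instruction with the actions that were actually recorded."""
    pairs = []
    for clip in clips:
        instruction = labeler(clip.frames).strip()
        if instruction:  # skip clips the labeler could not describe
            pairs.append(TrainingPair(instruction, clip.actions))
    return pairs


def toy_labeler(frames: Sequence[str]) -> str:
    """Placeholder for a VLM: describes the change from first to last frame."""
    return f"Go from '{frames[0]}' to '{frames[-1]}'"


if __name__ == "__main__":
    demo = [Clip(frames=["cup on table", "cup in hand"], actions=[[0.0], [1.0]])]
    for pair in hindsight_label(demo, toy_labeler):
        print(pair.instruction, len(pair.actions))
```

The point of the design is that the labeler is a plain callable: the recorded actions stay untouched, and only the language side of each pair is generated.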

Unified Architecture

Unlike modular systems, Helix uses one set of weights for all tasks. The same model opens drawers, hands objects to collaborators, and picks up crumpled shirts—no task-specific heads or fine-tuning.

Optimized for Real-World Deployment

Helix runs on embedded GPUs, with S2 and S1 operating asynchronously. S2 updates intent latents in the background, while S1 executes 200Hz control loops using whichever latent is most recent. This asynchronous setup mirrors the conditions used in training, which minimizes the gap between training and deployment behavior.
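A minimal sketch of this asynchronous pattern, using Python threads, might look like the following. It is not Figure's implementation: `LatentSlot`, `s2_worker`, `s1_loop`, and `run_demo` are hypothetical names, the latent is a plain string, and `time.sleep` only approximates a 200Hz loop rather than providing real-time scheduling.

```python
import threading
import time


class LatentSlot:
    """Holds only the newest latent: S2 overwrites it, S1 reads it."""

    def __init__(self, initial):
        self._lock = threading.Lock()
        self._value = initial
        self._version = 0

    def publish(self, value):
        with self._lock:
            self._value = value
            self._version += 1

    def latest(self):
        with self._lock:
            return self._value, self._version


def s2_worker(slot, stop, period_s=1 / 8):
    """Slow loop: stand-in for the VLM producing a new intent latent."""
    step = 0
    while not stop.is_set():
        step += 1
        slot.publish(f"intent-{step}")
        stop.wait(period_s)  # returns early once stop is set


def s1_loop(slot, n_steps, period_s=1 / 200):
    """Fast loop: every step reads the newest latent and never waits on S2."""
    versions = []
    for _ in range(n_steps):
        _latent, version = slot.latest()
        versions.append(version)  # a real policy would compute an action here
        time.sleep(period_s)      # approximate pacing only
    return versions


def run_demo(n_steps=100):
    slot = LatentSlot("idle")
    stop = threading.Event()
    worker = threading.Thread(target=s2_worker, args=(slot, stop), daemon=True)
    worker.start()
    versions = s1_loop(slot, n_steps)
    stop.set()
    worker.join()
    return versions


if __name__ == "__main__":
    seen = run_demo()
    print(len(seen), "S1 steps,", len(set(seen)), "distinct latents seen")
```

The single-slot design means S1 always acts on the freshest intent available, and a slow S2 update delays new intent without stalling the control loop.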

Why This Matters: The Future of Home Robotics

Helix isn’t just a lab breakthrough—it’s commercially viable. Its ability to generalize across tasks and environments means robots can:

Assist in homes, hospitals, or warehouses without costly reprogramming.

Take on new tasks from natural-language commands, without task-specific fine-tuning.

Collaborate fluidly, unlocking applications like disaster response or elder care.

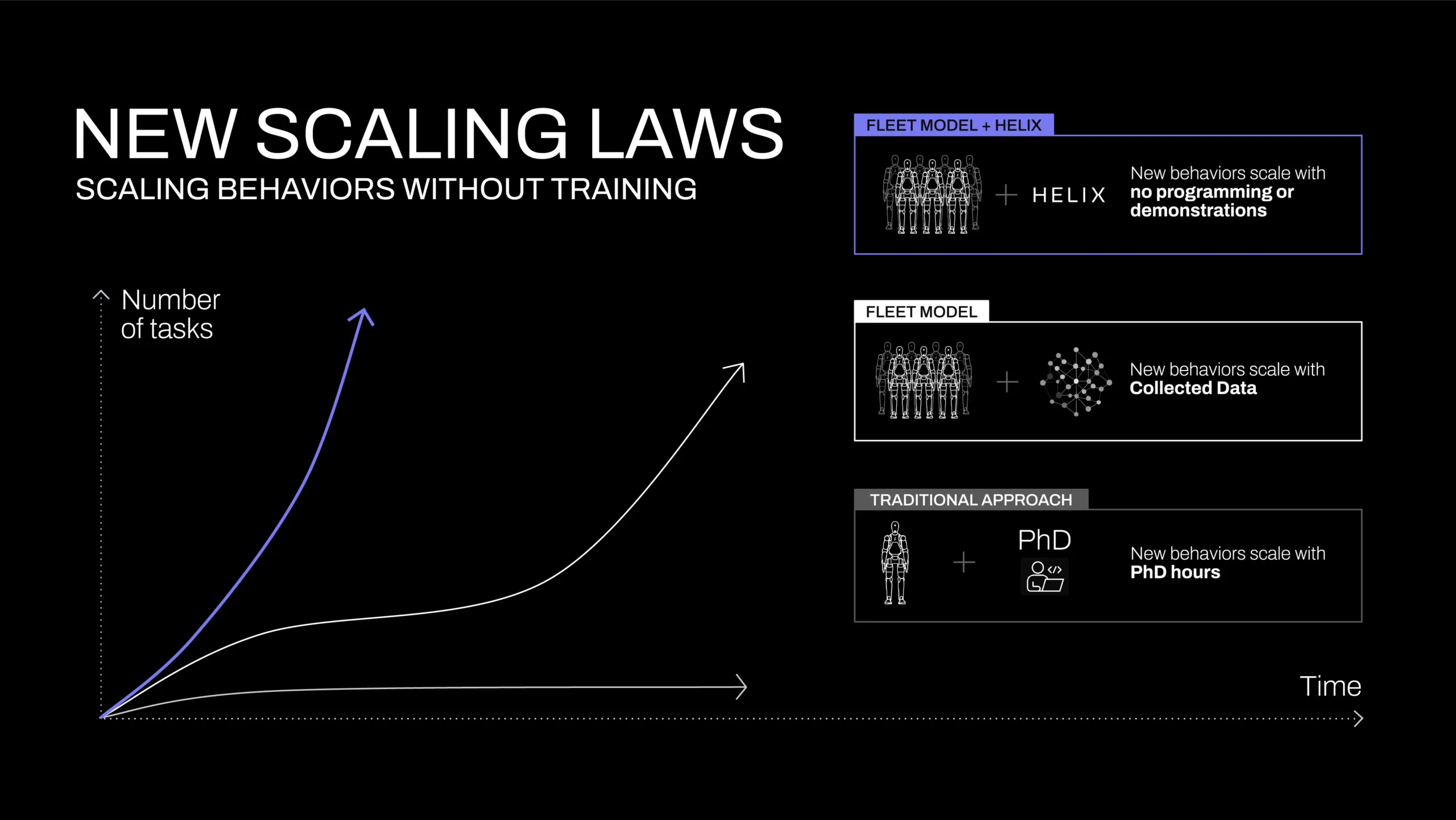

Figure 1 shows how Helix flattens the robotics learning curve: skills scale with language, not PhDs or data.

Figure 2 shows a Helix-powered Figure 02 robot deciding to put a bottle of ketchup in the fridge. Because the ketchup had never been opened, this was the wrong decision.
